Game Log 4 - Create

Preparation for the EXPO

While preparing for the EXPO, we focused on getting the core game to work. This meant that we had to reprioritise and cut some planned features, such as limiting the amount of paint a player has (to be refilled at a paint bucket) and occluding objects that players could move out of the way by hand. Cutting both also removed the incentives to move around that we had wanted to give players. This could be one reason for the lack of motion sickness, which we discuss further in the evaluation.

As our playtest only included the shader and was delayed, we had to add the complete gameplay loop and narration during the last week. During this time, we each worked on different aspects and made plenty of voice lines and 3D models. In our opinion, this gave the game a lot more character. Ideally, we would have liked to add more variety, but again, time was too short. Integrating the parts we had worked on separately went mostly without problems, so we could spend one day preparing for the actual EXPO, tweaking some numbers to make the game feel better, and fixing plenty of bugs that were still present.

For the EXPO itself, we recorded a video as a showcase of the game, but we were lucky to also get live casting, which we used as the EXPO Plaque instead. We also wanted to showcase how our game transforms reality into a romantic version, so we added an easel with a landscape picture that users should see being turned into a romanticised painting. Finally, we prepared a pitch that included how we incorporate cultural heritage and EU values to give the EXPO visitors a clear connection to our game.

Technical description and challenges

The eye-catcher and a major part of development was our custom-made "Paintify" effect, with which we turn reality into an art piece from the Romantic era. We had to go through several stages to create it.

First, we needed access to the player's actual environment. This is done via Meta's recently released Passthrough Camera API. The API allows reading the front-facing camera image of the Meta Quest 3/3S as a WebCamTexture, which can then be modified. This access came with major technical difficulties, however, probably because the API is made for "ML/CV pipelines" rather than for graphical effects such as ours. This shows in several ways. For one, the API only gives access to the camera image of one eye, so a stereoscopic effect was not possible. Secondly, the camera image has a latency of 40-60 ms, so the individual texture updates are rather noticeable. The largest issue, however, is that the camera image only fills part of the actual viewing frustum, so as the player turns, the edges of the updates are always visible.

There were two possible solutions: either we limit the view frustum significantly, for example with a vignette, or we keep the full field of view and keep track of the environment where the player has already looked. We chose the latter and implemented it with a cubemap that is filled with the camera's data depending on where the user is looking. This rather simple approach of stitching multiple images together is by no means flawless, but it was enough to improve the illusion of painting the environment.
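As a rough illustration of the cubemap idea, the sketch below maps a view direction to one of six cube faces and a texel on that face. It is written in Python for readability and is not the project's shader code. The function name, the face labels, and the OpenGL-style face orientation are assumptions made for this example, and the engine's own cubemap layout may differ.

```python
def direction_to_cubemap_texel(direction, face_size):
    """Map a 3D view direction to (face, column, row) on a cubemap.

    Assumes the OpenGL face orientation convention; other engines may flip axes.
    """
    x, y, z = direction
    ax, ay, az = abs(x), abs(y), abs(z)
    if ax == 0 and ay == 0 and az == 0:
        raise ValueError("direction must be non-zero")

    # The axis with the largest magnitude decides which face the direction hits.
    if ax >= ay and ax >= az:
        face, u, v, major = ("+x" if x > 0 else "-x"), (-z if x > 0 else z), -y, ax
    elif ay >= az:
        face, u, v, major = ("+y" if y > 0 else "-y"), x, (z if y > 0 else -z), ay
    else:
        face, u, v, major = ("+z" if z > 0 else "-z"), (x if z > 0 else -x), -y, az

    # Project onto the face plane, then remap from [-1, 1] to [0, 1].
    s = 0.5 * (u / major + 1.0)
    t = 0.5 * (v / major + 1.0)

    # Convert to integer texel coordinates, clamping the upper edge.
    col = min(int(s * face_size), face_size - 1)
    row = min(int(t * face_size), face_size - 1)
    return face, col, row
```

Storing a camera pixel then amounts to writing its colour at the returned texel, for example `cubemap[direction_to_cubemap_texel(d, 256)] = colour` with a dictionary standing in for the texture. Texels that the camera never covered simply keep their previous value, which is what lets the full field of view persist as the player turns.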

The second part is the painting effect itself. For this, we wrote a custom compute shader that combines various shader techniques. Before manipulating any pixel data, we had to do some performance optimisation. We first check whether a fragment can be seen by the player. This is done by transforming the fragment coordinates into clip space, where we calculate the normalised device coordinates. If those are larger than one or less than zero, we skip the fragment and leave it as it is. Otherwise, we begin manipulating the fragment. First, we use the Voronoi technique to create a semi-random noise pattern, which, in combination with a signed distance function, determines the point in our environment map from which the fragment samples its colour. Then, depending on the sampled colour's HSV values, we choose a brush stroke (e.g. sky blue => wide horizontal brush strokes, grass green => short vertical strokes). Finally, depending on the result, we sample different brush textures and apply them to the output.
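The three steps above (visibility check, Voronoi lookup, colour-based brush choice) can be sketched on the CPU as follows. This is a simplified Python illustration, not the actual compute shader. All function names, the remapping of the coordinates to the zero-to-one range, and the hue and saturation thresholds are assumptions chosen for this example, and the signed distance function is left out.

```python
import colorsys
import math


def is_on_screen(clip_pos):
    """Perspective-divide a clip-space position and test it against [0, 1]."""
    x, y, _z, w = clip_pos
    if w <= 0.0:  # behind the viewer
        return False
    # Assumption: coordinates are remapped from [-1, 1] to [0, 1] before the test.
    sx = 0.5 * (x / w) + 0.5
    sy = 0.5 * (y / w) + 0.5
    return 0.0 <= sx <= 1.0 and 0.0 <= sy <= 1.0


def nearest_seed(point, seeds):
    """Voronoi lookup: return the seed point closest to the given point."""
    return min(seeds, key=lambda seed: math.dist(point, seed))


def pick_brush(rgb):
    """Choose a brush style from a colour's hue (thresholds are illustrative)."""
    h, s, v = colorsys.rgb_to_hsv(*rgb)
    hue_deg = h * 360.0
    if s < 0.15 or v < 0.15:  # too grey or too dark to classify by hue
        return "neutral"
    if 180.0 <= hue_deg <= 260.0:  # blue range, e.g. sky
        return "wide_horizontal"
    if 70.0 <= hue_deg <= 160.0:  # green range, e.g. grass
        return "short_vertical"
    return "neutral"
```

In this sketch every fragment inside one Voronoi cell samples its colour at the same seed point, which produces flat, patch-like areas; `pick_brush` then decides which stroke texture such a patch would receive.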

Lastly, we had to enable the user to actually paint reality. This is achieved with another cubemap, which acts as a mask for the "Paintify" effect. The same approach also allows objects to be painted over.
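One way to read the mask idea is as a per-texel blend weight: where the mask is 0 the raw camera image shows, and where it is 1 the painted version shows. The Python sketch below illustrates this on a single cube face stored as a nested list. The function names and the hard-edged circular brush are assumptions for this example, not the project's implementation.

```python
def blend_with_mask(passthrough_rgb, painted_rgb, mask):
    """Linearly interpolate between the camera colour and the painted colour."""
    m = min(max(mask, 0.0), 1.0)  # clamp the mask value to [0, 1]
    return tuple(p + (q - p) * m for p, q in zip(passthrough_rgb, painted_rgb))


def paint_stroke(mask_face, centre, radius):
    """Mark all mask texels within `radius` of `centre` as painted (1.0)."""
    cx, cy = centre
    for row in range(len(mask_face)):
        for col in range(len(mask_face[row])):
            if (col - cx) ** 2 + (row - cy) ** 2 <= radius ** 2:
                mask_face[row][col] = 1.0
```

A brush stroke only ever writes to the mask, so the effect shader stays unchanged and simply reads the mask value when deciding how much of the painted colour to show.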

In addition to the shader, we encountered several other technical challenges. Initially, we planned on using the Logitech MX Ink pen to allow for a higher level of presence for the user. Unfortunately, this pen did not work with the new version of the Meta SDK. Since we needed the most recent one for the Passthrough API, we had to fall back to a controller instead.

Another major headache was the Quest 3's standalone performance. Since we mainly developed over Quest Link, where the computer's hardware is used instead of the Quest's, this issue caught us by surprise. It was in fact the main driver for the current shader architecture, which runs quite well, especially in comparison to previous iterations. Because of this limitation, we also had to limit the size of the cubemap textures, leading to noticeable pixelation in the painting effect.

Evaluation and key insights

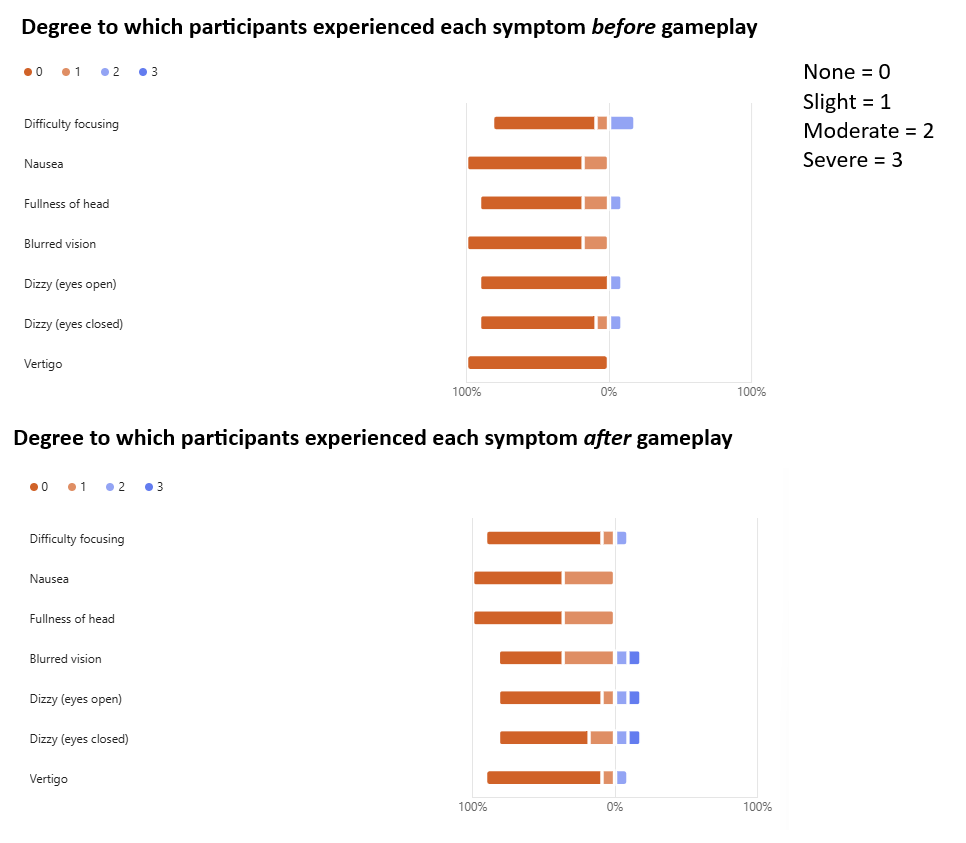

Based on the Simulator Sickness Questionnaire (SSQ) items that we included in our questionnaire, we ran a Wilcoxon signed-rank test to learn whether the game induced motion sickness. Fortunately, for almost all of the SSQ dimensions we surveyed (difficulty focusing, nausea, fullness of head, dizziness (eyes open), dizziness (eyes closed), and vertigo), the results show no significant difference in participants' experiences before and after gameplay. There was, however, one significant difference for "Blurred vision": 81% of participants reported no blurred vision before gameplay, compared with 45% after gameplay.
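For readers unfamiliar with the test, the sketch below shows how the Wilcoxon signed-rank statistic is computed from paired before/after ratings. It is a plain-Python illustration, not the analysis script used for the study, and it computes only the rank sums, not the p-value. The function name is an assumption, and any numbers passed to it in examples are placeholders, not our questionnaire data.

```python
def wilcoxon_w(before, after):
    """Return (W+, W-, W) for paired samples, dropping zero differences."""
    # Signed differences; pairs with no change carry no information and are dropped.
    diffs = [a - b for b, a in zip(before, after) if a != b]
    ordered = sorted(diffs, key=abs)

    # Assign 1-based ranks by absolute difference, averaging ranks across ties.
    ranks = [0.0] * len(ordered)
    i = 0
    while i < len(ordered):
        j = i
        while j + 1 < len(ordered) and abs(ordered[j + 1]) == abs(ordered[i]):
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1

    # Sum the ranks separately for increases and decreases.
    w_plus = sum(r for d, r in zip(ordered, ranks) if d > 0)
    w_minus = sum(r for d, r in zip(ordered, ranks) if d < 0)
    return w_plus, w_minus, min(w_plus, w_minus)
```

A small W relative to the number of non-zero pairs means the changes mostly point in one direction, which is what a significant result such as the one for "Blurred vision" reflects. The statistic is then compared against a reference distribution to obtain the p-value.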

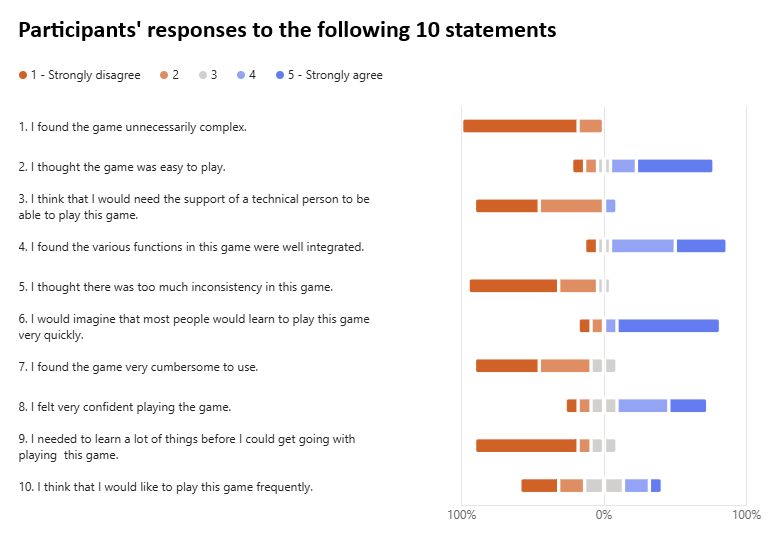

Overall, participants found the game easy to get started with and play. Results were mixed when asked if the participant felt confident playing the game, where a slight majority answered that they did feel confident. When asked if the participants would want to play this game frequently, responses were once again mixed, with a slight majority disagreeing.

Some key insights from the users at the EXPO include reports about not knowing that the goal was to start painting from the get-go. Another participant reported that sometimes they did not know if there were new things to look out for. Multiple participants liked the painting mechanic both in terms of painting reality as well as removing bad objects. 60 seconds into the game, we automatically have the game paint over anything that was unpainted before, and after the EXPO, we pondered whether that was actually a good decision, since it resulted in some participants becoming confused about what to do next.

Brush Hour

Group project for the EPIC-WE Cultural Game Jam

| Field | Value |
| --- | --- |
| Status | Prototype |
| Author | aarandlautim |
| Genre | Role Playing |
| Languages | English |
